NVIDIA’s Blackwell AI Chip: Revolutionizing Deep Learning Acceleration

In the fast-evolving field of artificial intelligence (AI) and deep learning, NVIDIA has long been at the forefront of hardware innovation. Its recently announced Blackwell AI chip is a significant step forward in deep learning acceleration. The chip is designed to change how AI models are trained and deployed, with gains in both performance and efficiency.

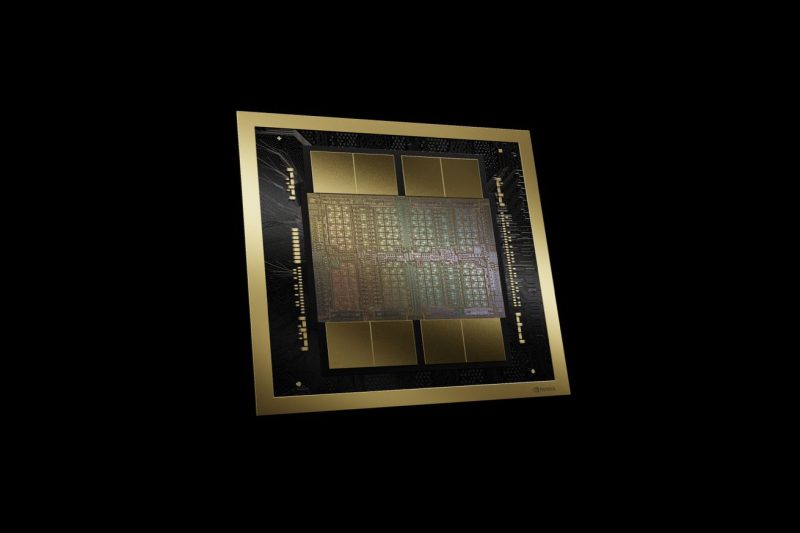

A key aspect of the Blackwell AI chip is its architecture, which is designed specifically for the growing computational demands of deep learning. The design aims to maximize throughput (how much work completes per second) and minimize latency (how long a single request takes). It does so through a combination of design choices and optimizations intended to perform well across a wide range of AI workloads.
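Throughput and latency often pull in opposite directions, which a small calculation can make concrete. The sketch below assumes a simple linear cost model (a fixed overhead per batch plus a constant time per item). The function names and every number are hypothetical and chosen for illustration; none are measurements of the Blackwell chip.

```python
def batch_latency_ms(batch_size, fixed_overhead_ms, per_item_ms):
    """Time to finish one batch under an assumed linear cost model."""
    return fixed_overhead_ms + batch_size * per_item_ms


def throughput_per_s(batch_size, latency_ms):
    """Items completed per second when batches run back to back."""
    return batch_size * 1000.0 / latency_ms


# Hypothetical accelerator: 8 ms of fixed overhead, 0.5 ms per item.
results = {}
for batch_size in (1, 16, 64):
    latency = batch_latency_ms(batch_size, fixed_overhead_ms=8.0, per_item_ms=0.5)
    results[batch_size] = (latency, throughput_per_s(batch_size, latency))
    print(f"batch={batch_size:3d}  latency={latency:5.1f} ms  "
          f"throughput={results[batch_size][1]:7.1f} items/s")
```

Under these assumed numbers, larger batches raise throughput because the fixed overhead is shared by more items, but every request in the batch waits longer. Hardware that reduces the fixed overhead or the per-item time improves both figures at once.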

The Blackwell AI chip is built around a highly parallel architecture that spreads neural network computations across many cores at once. Because these computations consist largely of independent multiply-and-add operations, the design lets the chip handle large, complex AI models, making it well suited to tasks such as image recognition, natural language processing, and autonomous driving. The chip also incorporates specialized hardware accelerators that further shorten training times and speed up inference.
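To see why neural network computations parallelize so well, consider a single dense layer, which is a matrix multiplication in which each output row depends only on its own input row. The sketch below is a CPU-side illustration in plain Python, not Blackwell code: it splits the input into row blocks and hands each block to a separate worker. The helper names `matmul_rows` and `parallel_matmul` are hypothetical, and Python threads are used only to show the structure of the split, not to demonstrate a speedup.

```python
from concurrent.futures import ThreadPoolExecutor


def matmul_rows(a_rows, b):
    """Multiply a block of rows from A by the full matrix B."""
    cols = list(zip(*b))  # columns of B
    return [[sum(x * y for x, y in zip(row, col)) for col in cols]
            for row in a_rows]


def parallel_matmul(a, b, workers=4):
    """Split A into row blocks and multiply each block independently."""
    block = max(1, -(-len(a) // workers))  # ceiling division
    blocks = [a[i:i + block] for i in range(0, len(a), block)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # Each block needs only its own rows of A plus all of B,
        # so no worker has to wait for another worker's result.
        parts = list(pool.map(matmul_rows, blocks, [b] * len(blocks)))
    return [row for part in parts for row in part]


# A tiny "layer": 4 input samples with 3 features each, mapped to 2 outputs.
inputs = [[1, 2, 3], [4, 5, 6], [7, 8, 9], [1, 0, 1]]
weights = [[1, 0], [0, 1], [1, 1]]
outputs = parallel_matmul(inputs, weights, workers=2)
print(outputs)  # [[4, 5], [10, 11], [16, 17], [2, 1]]
```

A GPU applies the same idea at a much finer grain, assigning small tiles of the output matrix to many cores simultaneously.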

The Blackwell AI chip is also built with scalability in mind, so that it can be integrated into existing AI infrastructure. Whether it is deployed in data centers, edge devices, or autonomous vehicles, the chip is intended to deliver consistent and reliable performance. Support for a wide range of AI frameworks and libraries gives developers the flexibility to experiment with different models and algorithms without giving up performance.

The Blackwell AI chip is also designed for energy efficiency. By reducing power consumption without sacrificing performance, NVIDIA aims to deliver more computation for each watt consumed. This lowers operational costs and reduces the environmental impact of running AI workloads at scale.
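The link between energy efficiency and operating cost comes down to simple arithmetic: energy used equals power multiplied by time, and a faster chip finishes the same job in less time. The sketch below works through that calculation for two chips. All names and figures are hypothetical and serve only to illustrate the formula; they do not describe the Blackwell chip or any real hardware.

```python
def energy_cost(power_kw, hours, price_per_kwh):
    """Electricity cost of a constant load: kW x hours x price per kWh."""
    return power_kw * hours * price_per_kwh


def perf_per_watt(throughput, power_watts):
    """Samples processed per second for each watt drawn."""
    return throughput / power_watts


def job_cost(samples, throughput, power_watts, price_per_kwh):
    """Electricity cost of processing a fixed number of samples."""
    hours = samples / throughput / 3600.0
    return energy_cost(power_watts / 1000.0, hours, price_per_kwh)


# Hypothetical chips: throughput in samples/s, power in watts.
older = {"throughput": 1000.0, "power_watts": 500.0}
newer = {"throughput": 2500.0, "power_watts": 1000.0}

samples = 3_600_000  # size of the job
price = 0.10         # assumed electricity price per kWh

older_cost = job_cost(samples, price_per_kwh=price, **older)
newer_cost = job_cost(samples, price_per_kwh=price, **newer)
print(f"older: {perf_per_watt(**older):.1f} samples/s/W, cost {older_cost:.3f}")
print(f"newer: {perf_per_watt(**newer):.1f} samples/s/W, cost {newer_cost:.3f}")
```

In this made-up comparison the newer chip draws twice the power, yet the job costs less because it finishes in well under half the time. Performance per watt, rather than peak power draw, is what determines the energy bill for a fixed workload.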

Looking ahead, the Blackwell AI chip is set to play an important role in the next wave of AI innovation. Its combination of performance, scalability, and energy efficiency opens the way for new work in deep learning research and applications as NVIDIA continues to push the boundaries of AI hardware.

In conclusion, the Blackwell AI chip represents a significant milestone in the evolution of deep learning acceleration. With its focus on performance and efficiency, NVIDIA has set a new standard for AI hardware, one that provides a solid foundation for future innovation in AI and deep learning.